While h2o only offers a few fixed AI models, PocketPal lets you load external AI models.

Let's learn about GGUF files.

These are model files that you can use on a mobile device. Sites like huggingface.co host many different GGUF files.
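Every GGUF file starts with a small fixed header, so reading it is a quick way to check that a download is really a GGUF file. Below is a minimal sketch, assuming the GGUF version 2 or later layout: the 4-byte magic `GGUF`, then a little-endian uint32 version, a uint64 tensor count and a uint64 metadata key/value count. `read_gguf_header` and `GGUFHeader` are hypothetical names used only for illustration.

```python
import struct
from typing import NamedTuple

# Assumed layout (GGUF v2+): magic, uint32 version, uint64 tensor count,
# uint64 metadata key/value count, all little-endian.
HEADER_FMT = "<IQQ"


class GGUFHeader(NamedTuple):
    version: int
    tensor_count: int
    metadata_kv_count: int


def read_gguf_header(path: str) -> GGUFHeader:
    """Read and validate the fixed-size header at the start of a GGUF file."""
    with open(path, "rb") as f:
        magic = f.read(4)
        if magic != b"GGUF":
            raise ValueError(f"not a GGUF file (magic={magic!r})")
        size = struct.calcsize(HEADER_FMT)  # 4 + 8 + 8 = 20 bytes, no padding
        data = f.read(size)
        if len(data) != size:
            raise ValueError("file too short for a GGUF header")
        version, n_tensors, n_kv = struct.unpack(HEADER_FMT, data)
    return GGUFHeader(version, n_tensors, n_kv)
```

For example, `read_gguf_header("Phi-3.5-mini-instruct-IQ4_XS.gguf")` would return the version and counts, or raise `ValueError` if the file is truncated or not GGUF at all.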

When downloading, you will see many types of GGUF files, with names like Q4_K_M, Q4_K_L, etc. Here's an explanation:

Variants like Q4_K_M, Q4_K_L, and Q4_K_S are different quantization variants within the same 4-bit precision category. They differ in how the weights are optimized, mainly in which parts of the model are kept at higher precision:

1. Q4_K_M: Medium optimization, balanced between quality and memory usage.

2. Q4_K_L: Larger or higher-quality optimization, preserving more accuracy at the cost of slightly more resources.

3. Q4_K_S: Smaller or speed-focused optimization, sacrificing some quality for better performance on limited hardware.

These are tailored to meet different hardware and task requirements.
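To see how these variants trade size for quality, a rough rule is: file size ≈ number of parameters × bits per weight ÷ 8. The sketch below applies that rule. The bits-per-weight values in `ASSUMED_BPW` are illustrative placeholders, not official figures, and the 3.8 billion parameter count is just an assumed example size. Real files also contain metadata, so always compare against the file sizes shown on the download page.

```python
def estimate_gguf_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Rough file size in GB (10**9 bytes): params * bits / 8, ignoring metadata."""
    return n_params * bits_per_weight / 8 / 1e9


# Illustrative bits-per-weight values only (assumptions, not official numbers).
ASSUMED_BPW = {"Q4_K_S": 4.5, "Q4_K_M": 4.8, "Q4_K_L": 5.0}

if __name__ == "__main__":
    for name, bpw in ASSUMED_BPW.items():
        # Assumed example model with 3.8 billion parameters.
        print(f"{name}: ~{estimate_gguf_size_gb(3.8e9, bpw):.2f} GB")
```

The point is the ordering: more bits per weight means a larger file and more memory on the phone, which is why the "S" variant suits limited hardware.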

What are some useful models?

There are many models, but here I'll tell you which ones I think are the best. Here's the list:

- Phi-3.5-mini-instruct-IQ4_XS.gguf (from Microsoft)

- Gemma-2-2b-it (from Google)

- Llama-3.1-Minitron-4B (from Nvidia)

The models mentioned above are censored. Here are some uncensored versions based on Llama-3.1:

- Magpie-Align/MagpieLM-4B-SFT-v0.1

- dumping-grounds/llama-3.1-minitron-8b-width-base

- anthracite-org/magnum-v2-4b

- rasyosef/Llama-3.1-Minitron-4B-Chat

Finding the GGUF files for the repositories above is tricky: they can only be found under the Quantizations section of each model page.
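A quantization repository usually lists many files that differ only in their quant tag, so it helps to filter by name. Below is a minimal sketch, assuming file names end in `-<TAG>.gguf` (for example `Phi-3.5-mini-instruct-IQ4_XS.gguf`); `quant_tag` and `pick_gguf` are hypothetical helper names. The list of file names could come from the `huggingface_hub` library's `list_repo_files(repo_id)`, but that network call is optional and not used here.

```python
import re
from typing import List, Optional

# Matches tags such as Q4_K_M, Q4_K_L, Q8_0 or IQ4_XS right before ".gguf".
# This naming pattern is an assumption based on common GGUF file names.
QUANT_RE = re.compile(r"[-.](I?Q\d+(?:_[A-Z0-9]+)*)\.gguf$", re.IGNORECASE)


def quant_tag(filename: str) -> Optional[str]:
    """Return the quant tag of a GGUF file name in upper case, or None."""
    m = QUANT_RE.search(filename)
    return m.group(1).upper() if m else None


def pick_gguf(filenames: List[str], wanted: str) -> List[str]:
    """Keep only the GGUF files whose quant tag equals `wanted` (case-insensitive)."""
    return [f for f in filenames if quant_tag(f) == wanted.upper()]
```

Usage: `pick_gguf(files, "IQ4_XS")` keeps just the IQ4_XS file and skips README files and the other quantizations.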

My recommendation is to use Phi-3.5-mini-instruct-IQ4_XS.

I've tested many models, almost every model mentioned above. In my experience, Phi-3.5 from Microsoft is far better and better optimized than the others.

ChatterUI App

It's a special app for me, so I decided to write a separate section for it. It provides some great features for using GGUF models offline. For example, if a response gets cut off, you can resume generation where it stopped. None of the other apps I tried offer this feature, which is what makes it special. The app isn't available on the Play Store, but you can find it in its GitHub repository.

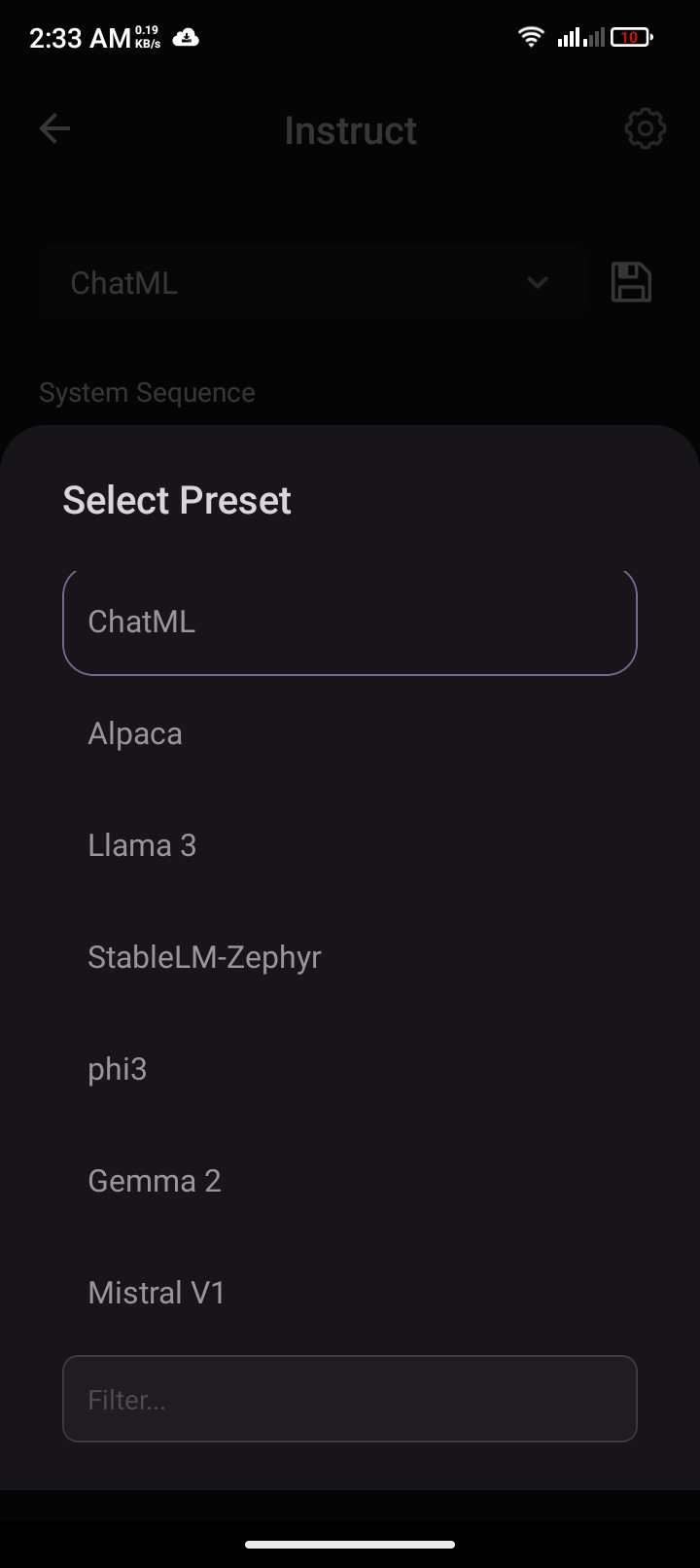

Chat Template

Make sure to use the right chat template. At first I didn't know about this and was using the wrong one. As a result, whenever I asked something, the model gave the correct answer but then never stopped and kept on writing. The chat template tells the LLM where each message starts and ends, so it knows when to stop writing. Always check which chat template a specific LLM uses; it is mentioned in the model's Hugging Face repository.
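To show what a chat template actually does, here is a minimal sketch assuming a Phi-3-style format with `<|user|>`, `<|assistant|>` and `<|end|>` markers. Other models (Gemma, Llama-3.1) use different markers, so check the exact template on the model's Hugging Face page. `apply_template` and `truncate_at_stop` are hypothetical helpers, not any app's real API.

```python
from typing import Dict, List

# Assumed Phi-3-style end-of-turn marker; other model families differ.
END = "<|end|>"


def apply_template(messages: List[Dict[str, str]]) -> str:
    """Turn chat messages into one prompt string (Phi-3 style, assumed)."""
    parts = [f"<|{m['role']}|>\n{m['content']}{END}\n" for m in messages]
    # Open the assistant turn so the model writes its reply next.
    parts.append("<|assistant|>\n")
    return "".join(parts)


def truncate_at_stop(text: str, stop: str = END) -> str:
    """Cut model output at the first end-of-turn marker, if one appears."""
    idx = text.find(stop)
    return text if idx == -1 else text[:idx]
```

This is why a wrong template causes endless output: if the app watches for a different marker (say Llama 3's `<|eot_id|>`) while the model emits `<|end|>`, `truncate_at_stop` never finds a match and the text just keeps going.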

Thank you for reading this article. Feel free to leave a comment below. Goodbye.

Tags:

AI Model